RAG Systems

Retrieval-Augmented Generation systems that combine the power of LLMs with your organization's knowledge base.

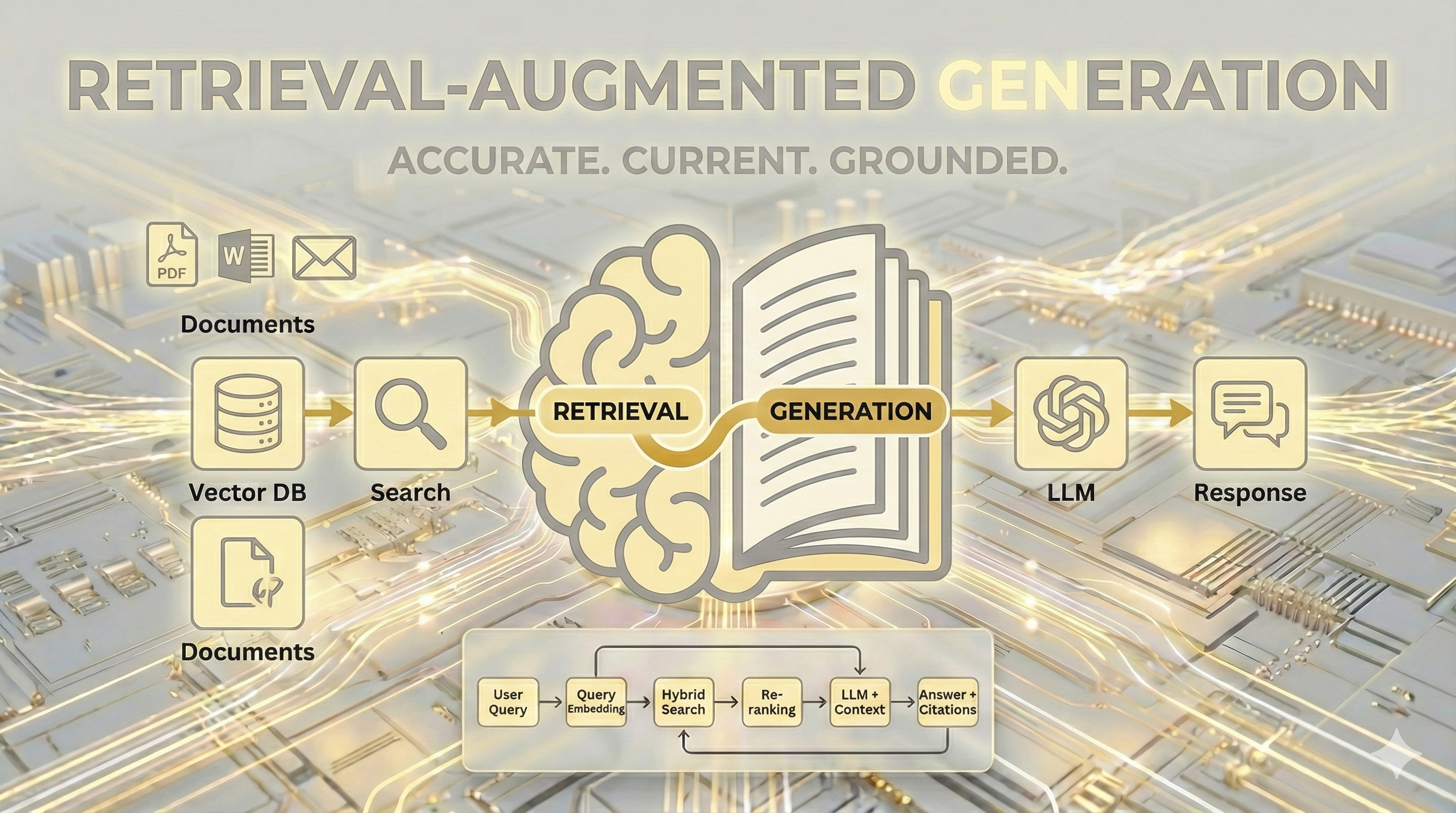

Retrieval-Augmented Generation

RAG systems combine the reasoning capabilities of Large Language Models with accurate retrieval from your knowledge base. The result: AI that provides relevant, accurate, and up-to-date information grounded in your data.

Why RAG?

- Accuracy: Answers grounded in your actual documents and data

- Currency: No retraining needed when your knowledge updates

- Transparency: Citations and source references for every answer

- Privacy: Your data stays in your infrastructure

- Cost-Effective: Leverage existing LLMs without expensive fine-tuning

RAG is Part of a Broader Solution

Beyond Simple Q&A

A production RAG system is rarely just “embed documents and query.” Successful implementations integrate:

- Data Pipelines: Continuous ingestion, processing, and indexing of new content

- Quality Assurance: Document validation, deduplication, and freshness management

- User Experience: Chat interfaces, feedback loops, and conversation memory

- Monitoring & Analytics: Usage tracking, query analysis, and retrieval quality metrics

- Access Control: Role-based permissions and document-level security

- Hybrid Approaches: Combining RAG with agents, structured data queries, and workflow automation

The RAG Process

flowchart LR

subgraph Ingestion["📥 Document Ingestion"]

A[Documents] --> B[Chunking]

B --> C[Embedding]

C --> D[(Vector DB)]

end

subgraph Query["🔍 Query Processing"]

E[User Query] --> F[Query Embedding]

F --> G{Hybrid Search}

G --> H[Semantic Search]

G --> I[Keyword Search]

end

subgraph Retrieval["📚 Context Assembly"]

H --> J[Re-ranking]

I --> J

J --> K[Top-K Selection]

K --> L[Context Window]

end

subgraph Generation["🤖 Response"]

L --> M[LLM + Context]

M --> N[Answer + Citations]

end

D --> G

style Ingestion fill:#e0f2fe,stroke:#0284c7

style Query fill:#fef3c7,stroke:#d97706

style Retrieval fill:#dcfce7,stroke:#16a34a

style Generation fill:#f3e8ff,stroke:#9333ea

Our RAG Architecture

They are all different

We build production-grade RAG systems with:

Document Processing

- Multi-format ingestion (PDF, Word, HTML, Markdown, Email)

- Intelligent chunking strategies (semantic, recursive, document-aware)

- Metadata extraction and enrichment

- Table and image extraction with OCR

- Version tracking and incremental updates

Vector Storage & Search

- PostgreSQL with pgvector for integrated solutions

- Dedicated vector databases (Qdrant, Weaviate, Pinecone, Milvus)

- Hybrid search combining semantic and keyword matching

- Multi-tenant architectures with data isolation

- Filtering by metadata, date ranges, and access permissions

Retrieval Optimization

- Query expansion and reformulation

- Cross-encoder re-ranking for precision

- Contextual compression to maximize relevant information

- Parent-child document retrieval for full context

- Multi-step retrieval for complex queries

Response Generation

- Multiple LLM support (OpenAI, Anthropic, local models)

- Structured output generation (JSON, tables, summaries)

- Source citation with page/section references

- Confidence scoring and uncertainty detection

- Streaming responses for better UX

Current Trends & Advanced Techniques

The RAG landscape is evolving rapidly. We implement cutting-edge approaches:

Agentic RAG

Combining retrieval with autonomous agents that can:

- Decide when to retrieve vs. use existing context

- Perform multi-hop reasoning across documents

- Call external tools and APIs when needed

- Self-correct based on retrieved information

Graph RAG

Enhancing retrieval with knowledge graphs:

- Entity extraction and relationship mapping

- Graph-based context expansion

- Combining structured and unstructured knowledge

- Better handling of complex, interconnected topics

Adaptive Chunking

Moving beyond fixed-size chunks:

- Semantic chunking based on content structure

- Document-aware splitting (respecting sections, paragraphs)

- Late chunking with contextualized embeddings

- Dynamic chunk sizing based on content density

Evaluation & Optimization

Systematic quality improvement:

- Automated retrieval quality metrics (MRR, NDCG, recall)

- LLM-as-judge for answer quality assessment

- A/B testing of retrieval strategies

- Continuous feedback integration

Project Highlights

Internal Documentation Center

Case Study

Challenge: A client needed to make thousands of technical PDF documents searchable and queryable through natural language, enabling engineers to quickly find relevant specifications, procedures, and guidelines.

Solution:

- Automated PDF ingestion pipeline with intelligent text extraction

- Table and diagram recognition for technical content

- Semantic search across the entire document corpus

- Conversational interface with source citations

- Role-based access control for sensitive documents

Results: Reduced documentation lookup time from hours to seconds, with accurate source references for compliance requirements.

Intelligent News & Trend Analysis

Case Study

Challenge: A client needed to stay informed about industry developments but was overwhelmed by the volume of newsletters, alerts, and updates arriving via email from various sources.

Solution:

- Automated email ingestion from curated source lists

- Content extraction and categorization

- Daily trend reports with key insights and summaries

- Growing knowledge base of historical intelligence

- Conversational interface to query the accumulated knowledge

- Topic tracking and alert generation for specific interests

Results: Transformed information overload into actionable intelligence, with executives receiving concise daily briefings and the ability to deep-dive into any topic through natural conversation.

Use Cases

- Enterprise Knowledge Base: Internal documentation, policies, procedures

- Customer Support: Product manuals, FAQs, troubleshooting guides

- Legal & Compliance: Contracts, regulations, case law

- Research & Intelligence: Scientific papers, market reports, competitive analysis

- Technical Documentation: API docs, specifications, engineering standards

- HR & Operations: Employee handbooks, onboarding materials, process guides

Integration Options

We integrate RAG into your existing workflows:

- REST API Endpoints: Direct integration with your applications

- Slack/Teams Bots: Knowledge access in your communication tools

- Web Chat Interfaces: Embeddable widgets for websites and portals

- Email Assistants: Automated responses and research support

- Mobile Apps: On-the-go access to your knowledge base

- n8n/Zapier Workflows: Automated knowledge-driven processes

Technology Stack

| Component | Options |

|---|---|

| Embeddings | OpenAI, Cohere, BGE, E5, local models |

| Vector DB | pgvector, Qdrant, Weaviate, Pinecone, Milvus |

| LLMs | GPT-4, Claude, Llama, Mistral, local deployment |

| Orchestration | LangChain, LlamaIndex, custom pipelines |

| Infrastructure | Docker, Kubernetes, serverless |

Ready to unlock your organization’s knowledge? Let’s discuss your RAG implementation.

Related Content

Codex-V Knowledge Engine

Your Personal Technical Knowledge Engine Codex-V is a local-first knowledge management system …

Building RAG Systems for Production: Lessons Learned

Retrieval-Augmented Generation (RAG) has become the standard approach for building AI systems that …

AI Agents

Autonomous AI Agents AI agents go beyond simple question-answering. They can reason about problems, …