Codex-V Knowledge Engine

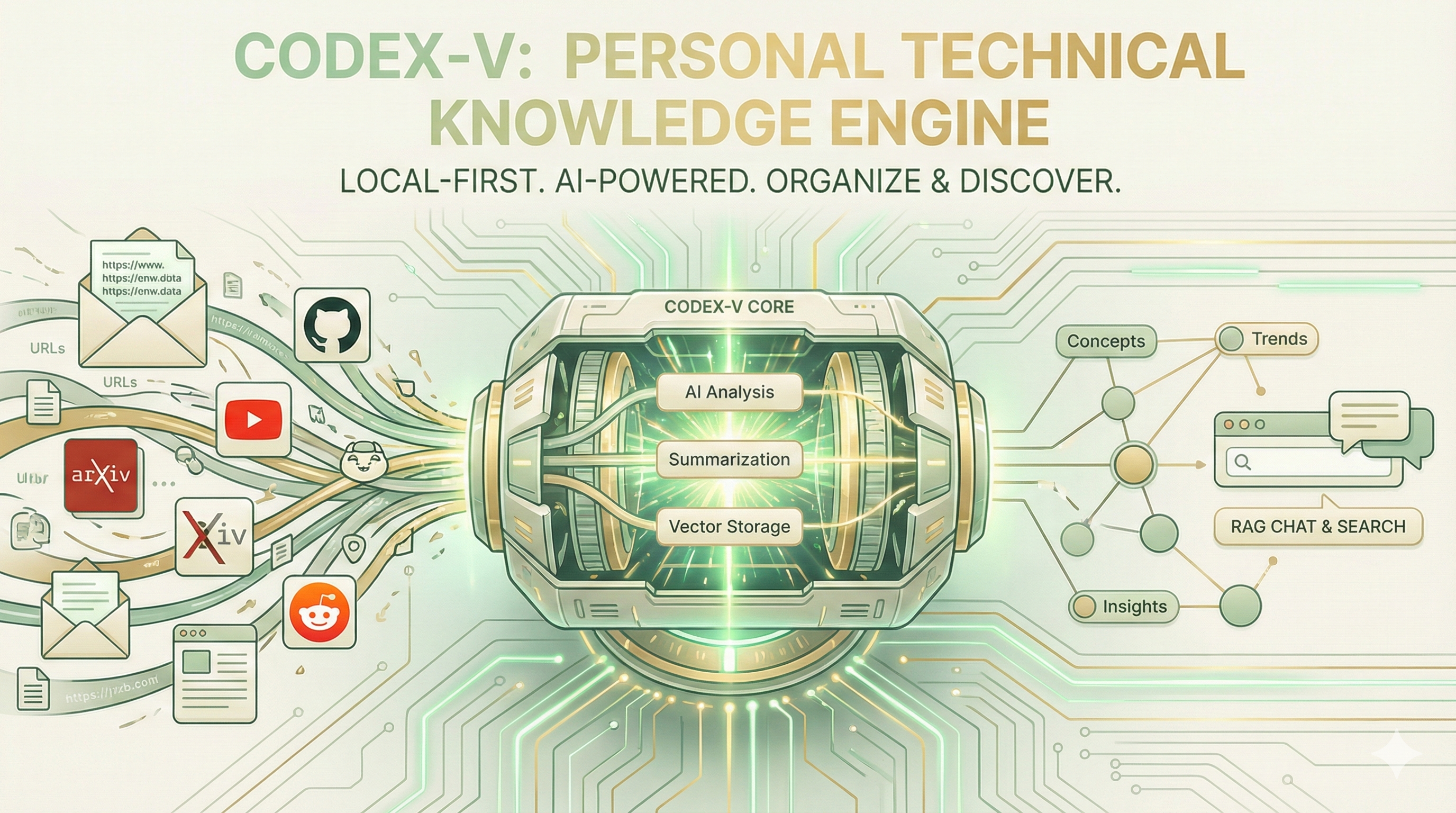

A local-first, privacy-centric knowledge management system that transforms scattered technical content into an intelligent, searchable second brain.

Your Personal Technical Knowledge Engine

Codex-V is a local-first knowledge management system designed for software engineers and technical professionals. It automatically ingests, analyzes, and organizes technical content from diverse sources into a searchable knowledge base with AI-powered chat capabilities.

The Problem We Solve

Information Overload

- Bookmarks saved but never revisited

- Links emailed to yourself, buried in your inbox

- YouTube tutorials watched but details forgotten

- Research papers downloaded but never searchable

- GitHub repos starred but their purpose forgotten

Codex-V transforms this chaos into an intelligent, queryable knowledge base.

How It Works

flowchart LR

subgraph Discovery["1. Discovery"]

A[Email with URLs] --> B[IMAP Polling]

C[Manual URL] --> B

B --> D[URL Classification]

end

subgraph Extraction["2. Extraction"]

D --> E{Source Type}

E -->|GitHub| F[API + README]

E -->|YouTube| G[Transcript]

E -->|ArXiv| H[LaTeX/PDF]

E -->|Blog| I[Readability]

E -->|Reddit| J[Post + Comments]

end

subgraph Analysis["3. AI Analysis"]

F & G & H & I & J --> K[Local LLM]

K --> L[Summary]

K --> M[Concepts]

K --> N[Relevance]

end

subgraph Storage["4. Knowledge Base"]

L & M & N --> O[PostgreSQL]

O --> P[pgvector]

P --> Q[Semantic Search]

end

style Discovery fill:#e0f2fe,stroke:#0284c7

style Extraction fill:#fef3c7,stroke:#d97706

style Analysis fill:#dcfce7,stroke:#16a34a

style Storage fill:#f3e8ff,stroke:#9333ea

The Workflow

- Send yourself an email with links to interesting content

- Codex-V polls your inbox and discovers new URLs

- Specialized extractors fetch content based on source type

- Local LLM analyzes and generates summaries, concepts, relevance scores

- Content is embedded and stored in a vector database

- Search semantically or chat with your knowledge base

Key Features

Multi-Source Ingestion

Supported Sources

| Source | Extraction Method | Special Features |

|---|---|---|

| YouTube | Transcript API / Whisper | Timestamped chunks, deep links to moments |

| GitHub | API + selective cloning | README, stars, language, file analysis |

| ArXiv | LaTeX source or PDF | Math preservation, author/abstract extraction |

| JSON API | Post + top comments with consensus analysis | |

| Blogs | Playwright + Readability | Cookie wall bypass, paywall support via cookies |

| Documentation | Browser rendering | Full page extraction with code blocks |

AI-Powered Analysis

Every piece of content is analyzed by a local LLM to generate:

- Concise Summary: 2-3 sentences capturing the key value

- Concept Tags: Automatically extracted topics and technologies

- Relevance Score: How well it matches your interests

- Key Insights: Actionable takeaways

Semantic Search & RAG Chat

Find by Meaning, Not Keywords

Search: “How do I implement mutex locking in Go?”

Chat Response: “Based on your saved YouTube video ‘Concurrency in Go’ and the GitHub repo ‘awesome-go-patterns’, mutex locking involves…”

[Sources cited with links and timestamps]

Trend Detection & Insights

Codex-V analyzes your ingestion patterns to identify:

- Emerging Interests: Topics appearing more frequently

- Key Themes: Dominant concepts in your recent reading

- Learning Patterns: How your focus evolves over time

Email Group Detection

When an email contains multiple related URLs (paper + code + demo), Codex-V automatically groups them together, making it easy to see the full context of related resources.

Privacy-First Architecture

Your Data Never Leaves Your Machine

Local-Only Components

- PostgreSQL + pgvector: All data stored locally

- Local LLM via Ollama/LM Studio: No API calls to external services

- Local Embeddings: sentence-transformers running on your machine

- Desktop App: Native Wails application, no browser required

Optional External Services

- Authenticated scraping: Uses your browser cookies for paywalled content

- Publishing LLM: Optional high-quality model for content generation

Technology Stack

| Component | Technology | Purpose |

|---|---|---|

| Frontend | Wails v2 + Vue 3 + TailwindCSS | Native desktop application |

| Backend | Python FastAPI | API, content extraction, LLM orchestration |

| Database | PostgreSQL 17 + pgvector | Storage and vector search |

| LLM | Ollama / LM Studio | Local inference (Nemotron, Qwen, Llama) |

| Embeddings | sentence-transformers | all-MiniLM-L6-v2 (384 dimensions) |

| Transcription | faster-whisper | Local GPU-accelerated transcription |

| Browser | Playwright | Authenticated and JavaScript-rendered content |

Content Studio

Beyond knowledge management, Codex-V includes a Content Studio for technical content creators:

- Topic Proposals: AI analyzes recent ingestions and suggests blog/post topics

- Content Generation: Creates publish-ready blog posts and social threads

- Source Attribution: Links back to the knowledge that inspired the content

- Publishing Pipeline: Integration with Hugo, social platforms, and more

Use Cases

For Software Engineers

- Capture interesting repos, tutorials, and documentation

- Search your personal knowledge during development

- Track emerging technologies in your field

For Researchers

- Organize papers, preprints, and technical blogs

- Query across your reading history

- Generate literature review summaries

For Technical Writers

- Build a knowledge base of source material

- Generate content ideas from recent reading

- Maintain attribution to original sources

For Team Leads

- Curate learning resources for your team

- Track industry trends and emerging technologies

- Build institutional knowledge repositories

Getting Started

Quick Setup

Prerequisites

- Docker Desktop - For PostgreSQL with pgvector

- Ollama -

ollama pull nemotronor your preferred model - Python 3.11+ - For the backend services

Installation

| |

Configuration

- Add your email source in Settings > Email Sources

- Configure LLM endpoint (Ollama default:

http://localhost:11434/v1) - Send yourself an email with interesting URLs

- Watch Codex-V build your knowledge base

Roadmap

Current Features

- Multi-source ingestion (YouTube, GitHub, ArXiv, Reddit, blogs)

- Local LLM analysis and summarization

- Semantic search with pgvector

- RAG chat with source citations

- Trend detection and daily insights

- Content Studio for topic proposals

Planned Features

- Browser extension for one-click capture

- Mobile companion app for search

- Team collaboration features

- Export to Obsidian/Notion formats

- Advanced graph visualization

Ready to build your second brain? Contact us for a demo or check out the GitHub repository.

Related Content

RAG Systems

Retrieval-Augmented Generation RAG systems combine the reasoning capabilities of Large Language …

Building RAG Systems for Production: Lessons Learned

Retrieval-Augmented Generation (RAG) has become the standard approach for building AI systems that …

Local LLMs

Local LLM Deployment Keep your data private with on-premise language models. We help organizations …